Data Centers

21 Nov, 2019

Data centers across the country are shifting into an accelerated growth mode that affects nearly every business on the planet.

Corporations have seized on the opportunity that access to, and the accumulation of, more data presents: providing goods and services faster, to more remote areas, with greater depth of resources and a better base of customer information than ever before.

Smart phones are compiling data on consumer demands every hour of every day, whether or not the consumer knows it, and transmitting that data to businesses to set up and manage customer profiles of nearly everyone who has ever used a smart phone to purchase anything.

One example of the reach of digital information gathering is in cattle farming. As reported in The Economist, one Irish company uses computer vision to track cows in barns and fields and alert farmers if a cow is not feeding when it should be, or moving in a way that suggests it might be sick.

There is also discussion about putting sensors inside cows that would monitor body temperature, movement and stomach acidity for the life of the cow, periodically uploading the results to the farmer.

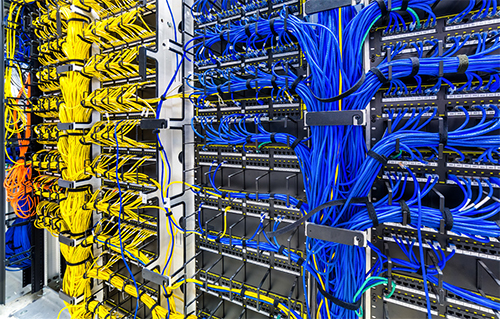

Handling all of that information, and using it for the competitive edge it brings to any business, has been a matter of expanding not only what data centers can do but how they do it.

THE DEVELOPMENT OF MORE DIGITIZATION

An article in the Wall Street Journal reported that the number of data centers worldwide that are owned and operated by cloud service providers, data-center landlords and other technology firms rose to roughly 9,100 this year from 7,500 last year. That number is expected to go to 10,000 by 2020.
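
To put those counts in perspective, the year-over-year growth they imply can be worked out directly. The sketch below uses only the three figures quoted above; the helper name `pct_change` is ours, chosen for illustration.

```python
def pct_change(old, new):
    """Return the percentage change from old to new."""
    return (new - old) / old * 100

# Data center counts as reported in the article.
last_year = 7_500
this_year = 9_100
projected_2020 = 10_000

# Growth already seen, and growth implied by the 2020 projection.
print(f"Last year to this year: {pct_change(last_year, this_year):.1f}%")   # 21.3%
print(f"This year to 2020: {pct_change(this_year, projected_2020):.1f}%")   # 9.9%
```

On these figures, the jump already recorded (about 21 percent) is roughly twice the further increase implied by the 2020 projection (about 10 percent).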

But that is just the tip of the iceberg.

A white paper from International Data Corporation (IDC) released in November 2018, “The Digitization of the World,” discussed the development of IDC’s DATCON (DATa readiness CONdition) index, and examined four industries as part of its DATCON analysis: financial services, manufacturing, healthcare, and media and entertainment.

The paper concluded that manufacturing and financial services are the leading industries in terms of maturity and usage of digital data resources, with media and entertainment most in need of a jump start.

Healthcare is primed to grow faster than the rest as a result of more available healthcare analytics, the increasing frequency and resolution of magnetic resonance imaging (MRI) scans, and other image and video-related data being captured in today’s advanced modes of medical care.

The IDC paper defined three primary locations where digitization is happening and where digital content is created: the core (traditional and cloud data centers), the edge (enterprise-hardened infrastructure like cell towers and branch offices), and the endpoints (PCs, smart phones, and IoT devices). Together, these locations are forecast to create 175 zettabytes of data by 2025 (1 zettabyte is equal to a billion terabytes, or a trillion gigabytes).
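
The zettabyte conversion is easy to check. The following is a minimal sketch that assumes decimal (SI) prefixes, which is what the "billion terabytes" figure implies; the `BYTES_PER` table and `convert` helper are illustrative names, not anything from the IDC paper.

```python
# Decimal (SI) unit sizes in bytes; binary units (GiB, TiB) would differ.
BYTES_PER = {"GB": 10**9, "TB": 10**12, "ZB": 10**21}

def convert(value, from_unit, to_unit):
    """Convert a whole-number data volume from a larger unit to a smaller one."""
    return value * BYTES_PER[from_unit] // BYTES_PER[to_unit]

print(f"1 ZB   = {convert(1, 'ZB', 'TB'):,} TB")    # a billion terabytes
print(f"1 ZB   = {convert(1, 'ZB', 'GB'):,} GB")    # a trillion gigabytes
print(f"175 ZB = {convert(175, 'ZB', 'TB'):,} TB")  # the 2025 forecast in terabytes
```

Integer arithmetic keeps the results exact at this scale. The helper is only meant for converting downward, since going from a smaller unit to a larger one would truncate.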

IDC forecasts that more than 150 billion devices will be connected across the globe by 2025, most of which will be creating data in real time. For example, automated machines on a manufacturing floor rely on real-time data for process control and improvement.

DATA CENTER DEALS

The trend is for businesses to not own and operate their own data centers, preferring to shift workloads to cloud providers or use colocation facilities to house their IT infrastructure, resulting in more traditional data centers being put up for sale.

New data from Synergy Research Group, a market intelligence and analytics company for the networking and telecoms industry, shows that 52 data center-oriented merger and acquisition deals closed in the first half of 2019, up 18 percent from the first half of 2018 and continuing a strong growth trend seen over the last four years.

Since the beginning of 2015, Synergy has identified well over 300 closed deals valued at more than $65 billion, with Equinix and Digital Realty, the world’s two leading colocation providers, at the head of the pack and accounting for 36 percent of total deal value over the period.

Synergy reports that other notable data center operators who have been serial acquirers include CyrusOne, Iron Mountain, Digital Bridge/DataBank, NTT and Carter Validus.

One growing hotspot for data center development is in and around the greater Washington, D.C. metro area, where Amazon will be locating its second headquarters. For example, Loudoun County, Virginia, touting itself as the “world’s largest concentration of data centers,” has 13.5 million square feet of data centers operating now, with another 4.5 million square feet under development.

Google will spend $600 million to develop new data centers in the Arcola Center development, a planned mixed-use development in Loudoun County, and on 57 acres in the Stonewall Business Park in the county, bringing the company’s total investment in the state to $1.2 billion.

LEADING DATA CENTER DEVELOPMENTS

Jennifer Cooke, research director for cloud to edge data center trends for IDC, focuses on managing data centers.

She says that, because of the shift to a much more predictive and proactive way of doing things using more and more data – such as data collected and used to make real-time adjustments to production on the manufacturing floor – some data centers are lagging behind in how they manage themselves. “What we are seeing now is that there are not the right people with the right skill set in data centers, and we are seeing the emergence of smarter data center technology that helps view and manage things in a more efficient way to plan better for more capacity better,” Cooke says.

She says that data centers are now overprovisioned on power as a matter of prudent operations, but are still working out how to right-size and modernize to meet the needs of business. “That is where we are seeing new services and new technologies in what we call an IDC techscape, a worldwide IT service management concept, to make things more efficient to allow you to manage remotely.”

The cyber security part of cloud use is always present, because getting hacked is not a question of if but when and who is responsible, she says. “It’s been a long-standing challenge in the industry about who has access to data,” Cooke says. “It’s a challenge wherever the data resides. It’s about new skillsets that need to be developed because of this hybrid cloud or multi-cloud world. We find a lot of organizations looking to have other providers or consultants come in to help them through that because the stakes are so high. Physical security becomes an issue as well.”

There is a lot going on in cooling technologies for data centers, she says, much of it managed through artificial intelligence (AI) solutions. “There are some interesting uses of artificial intelligence that takes all these massive numbers of data points gathered from the sensors of every rack, gathering data every 15 minutes for months or years, and drawing conclusions of what is happening within that environment, and then making recommendations about solutions.”

One company working on such AI is DeepMind, which has done projects that cut the energy used to keep data centers cool by 30 percent, among many other uses of AI. The company joined forces with Google in 2014 to accelerate its work.

Another company that recently joined with Google on data center solutions is Cisco. The two companies have been working together for the last two years, co-innovating a hybrid cloud architecture that empowers customers to innovate on their own.

The integration between Google Cloud’s Anthos and Cisco data center technologies – which include Cisco HyperFlex, ACI, SD-WAN and Stealthwatch Cloud – allows customers to seamlessly move workloads and applications across clouds and on-premises environments.

Ken Spear, a marketing manager for hybrid cloud solutions at Cisco, says that they will be implementing new capabilities and solutions as a result of that collaboration this fall.

One customer that Cisco and Google are working with wants to use the public cloud and deploy to remote locations when they introduce new applications. “They want all of this flexibility but they also want consistency and security and other things that they are used to having in their data center,” Spear says. “They said that even as they are delivering new services, they want that environment to have that consistency, and not have to do some sort of trial and error themselves.”

He says that infrastructure is another area where AI is being applied. Data centers are early adopters of AI. “We have a product that uses artificial intelligence in order to look at ways to optimize the way that workloads are employed, whether it’s about power consumption or trying to address certain peaks that they have, and only use the cloud when they need to address those peaks,” Spear says. “They have to be more responsive to the business and at the same time keep costs down. You can take AI and apply that to solve that, to analyze that and predict that to mitigate the costs and the risks in the process.”

THE SELF-ANALYZING BRAIN TO COME

Intel recently released a document reviewing the state of data centers, with a focus on what it calls software-defined infrastructure (SDI) as the next great leap in development. “Whereas some data centers only utilize around 50 percent of their capacity, an SDI data center uses intelligent telemetry to automate infrastructure provisioning and optimize resources dynamically across the data center, scale them as needed, and recover from failures.

“Essentially, the data center becomes a self-analyzing brain, built on an open infrastructure platform that delivers the services that can grow your business with greater efficiency and lower costs.”